The Complexities of Responding to Student Writing; or, Looking for Shortcuts via the Road of Excess

If a way to the Better there be, it exacts a full look at the Worst.

-- Thomas Hardy

The road of excess leads to the palace of wisdom

-- William Blake

Abstract: In all academic disciplines college teachers respond to student writing with shortcuts—checksheets, correction symbols, computer style checkers, etc. But while these methods save teachers time, do they help students improve their writing? A survey of research into teacher commentary, conceived of as a contextual discourse activity, initially questions the efficiency of many shortcuts because it finds complexities in all activity areas, in regulation (criteria, rules of genre and mode, disciplinary styles, and standards), consumption, production, representation, and identity. The research, however, also recommends particular shortcuts and methods of revising them for better efficiency and effect. It especially recommends restricting the volume of teacher commentary in ways that are task, discipline, and learner specific.

The more complex tasks are, the greater the temptation to simplify them. It is a temptation that faculty responding to student writing have not always resisted—hence prêt à porter devices such as checksheets, correction symbols, mnemonic acronyms, weighted criteria scales, peer-critique handouts, and computer feedback software. Therein lie two paradoxes. Simplifying a complex task may take the challenge and fascination out of it and end up making it laborious in a new way, boring and repetitious for both teacher and student, on the order of vacuuming the house. The other paradox has more serious implications and is the major concern of the present essay. Latent in a complex task may exist labor-saving methods, but how can they be extracted except through a full look at the task's true complexity? That takes labor, precisely the commodity that people who need shortcuts have in short supply. Sticking to current shortcuts postpones that full look, yet without it there is no guarantee they are the most efficient.

Perhaps it is time for an examination of the problematics entailed when teachers, anywhere in the university, respond to student writing. By response, I should emphasize, I do not mean the mere grading or evaluating of a piece of writing but further the recommending of ways to improve it. How can that formative act of response be made more workable, for teacher and for student? My answer can be encapsulated by rewriting Thomas Hardy's famous line in defense of "meliorism": If a way to the Efficient there be, it exacts a full look at the Complex. When it comes to the obligation to put words to a student's words, teachers in all disciplines, those draft horses of instruction, might well choose William Blake's road of excess—excess of understanding.

Obviously my argument is not that shortcuts in response signal poor teaching. Among other things that argument would be hypocritical on my part, since one such labor-saving method, called by its author "minimal marking" and still in wide-spread use after nearly a quarter of a century, was devised by myself (Haswell, 1983). My argument here is that if we want shortcuts (and who doesn't?) then we should find those that are doubly workable—both labor-effective for the teacher and learning-efficient for the student—and that a quick start to finding them is through an exploration of the true complexity of teacher response to student writing. With luck the pay-off will be directions for new solutions and for improvement, if not trashing, of old stopgaps.

My plan is to survey the major components of the activity of teachers responding to student writing, with an eye toward complexities that have been found there by researchers. In doing so I run a risk. That is of alienating some faculty in a way I do not wish. As we all know, teachers in all academic fields put in their hours gracing the margins of student writing with commentary. Chemistry lab reports and health-care case studies and mathematical proofs, when written by students, beg for writerly advice no less than first-year English-composition essays. But academic genres, with rhetorical aims and strategies peculiar to the discipline, sometimes beget distinct instructional response. Differences in the reception of disciplinary discourse, as we will see, are a major source of some of the complexities under review. Further, I am afraid that the urge to simplify may be stronger when teachers think about their own discipline's genres and teaching practices. Familiarity breeds temptation—the temptation to cut corners. It is true that researchers have sometimes found faculty across academic divisions, including English, ranking or otherwise sorting the criteria for evaluating writing in rather similar ways (e.g., Spooner-Smith 1973; Michael et al., 1980; Eblen, 1983; McCauley et al., 1993; Smith, 2003), but we will see that as often researchers have found sharp differences in understanding and application of the twists and turns of response. The risk is teachers imagining that when research is cited deconstructing their distinctive response to writing, their discipline is being put down. I can only trust everyone will soon notice, as the findings accrue, that the even gaze of research has observed pretty much the same curiosity in every field, that the ecology of response—its full human, social, and institutional context—is more complex than the customary practice of response seems to warrant.

A Model, Sufficiently Complex, For Surveying Response

Why is instructional response to writing so complicated, so problematic? Not surprisingly, there are any number of answers to this question, depending upon the questioner's angle of approach. Response integrates many activities, among them a reader trying to interpret and understand a text (Kucer, 1989), a mentor encouraging a sometimes resistant apprentice (Young, 1997), and a person socially and contextually sizing up another person (Bazerman, 1989), interacting with that person (Anson, 1989), and trying to collaborate together on a task (Martin & Rothery, 1986). As new approaches take center stage, new answers no doubt will accumulate.

For the purpose of this essay, my own answer derives from theories of discourse acts. I want to look at instructional response to writing as an activity supported by and supporting normal discourse practices, an activity no different and no less complex than recommending a book or answering an e-mail. Response, of course, has long been understood in terms of its ecology or its embeddedness in group, institutional, and social practices and constructions (Lucas, 1988; Gottshalk, 2003). Discourse activity theory has added the complication that, like all human practices, teacher-student interactions, including teacher response to student writing, are mediated by cultural tools, especially language but not exclusively language (Russell, 1995; Evans, 2003). The advantage to a discourse activity understanding of response is that it locates and embraces a breadth of interacting factors. It will respect, for instance, the student's perspective as well as the teacher's; will consider outcomes equally in terms of the learner, the teacher, the course, the academic department, or society at large; will treat time-on-task on a par with self-image; will accept technologies of response that arose with the post-Civil War pencil eraser and the post-Vietnam War style checker. The analysis of cultural discourse activity assumes a field dynamics, and a vision of teacher response as interactive and intermediating with everything in its surround is what I need for this examination of research findings and its main argument that such response is anything but simple.

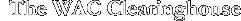

For my survey, let me borrow a recent model of cultural discourse activity that seems comprehensive yet neither too detailed nor too simplistic, Paul du Gay's "circuit of culture" (1997). Indeed it was originally designed for college students, organizing at least three volumes of a series of cultural-studies textbooks published by London's Open University. In this flow chart (Fig. 1), "through which any analysis of a cultural text or artifact must pass if it is to be adequately studied" (du Gay, 1997, 3), language practices interrelate dynamically through five activity nodes: consumption, identity, representation, regulation, and production. Application of the model does not require specialist knowledge. As a test illustration, take a 14-year-old who reads and then buys a t-shirt that says "SCHOOL SUCKS" (consumption) because it appeals to her vision of herself (identity) and next day wears it to class to make a statement about herself and perhaps to please her friends (representation). The principal soon hears about it from her teachers and with hardly a word sends her home to change clothes (regulation). It is not long before the company making the t-shirt becomes alarmed at public reaction and shelves that particular slogan (production). A cooked-up example, certainly, but not that far removed from the actual discursive chain of events jump-started last year by a college junior who submitted a paper to me with borrowed language that I readily traced with the help of Google.com. At every node in du Gay's "circuit of culture," response to language may operate. As may efficiency in response to language. The 14-year-old probably admires the economy of the two-word sentence in expressing her resistance to school. The principal probably prefers just sending her to her parents rather than giving her a lecture.

Figure 1: The Circuit of Culture, from Du Gay, 1997, Production of Culture/Cultures of Production

Regulation, the Epicenter of Instructional Response to Writing

I start with the factor of regulation, as it is perhaps the basic motive for teachers and their response to student writing. The term "regulation" may seem too Draconian for a service that teachers in all disciplines view as beneficial and perhaps even freeing for students. But beneficial for what purposes? Freed in what directions? Response is regulative because it hopes to move novices and their writing toward some more mature psychological, professional, social, or cultural ends. This is obvious with commentary that is corrective. "Your point is unclear" does its small part to keep obscurity out of public discourse. But even response that indicates no more than approval or liking (Elbow, 1993) reinforces some end—in that case an end already achieved—and thus helps regulate discourse around it. Back of all instructional response lie at least four muscular regulative apparatuses, intricately fused: criteria, or teacherly notions of good writing; rules of genre and mode, or parameters erected by the established history of particular discourse forms; disciplinary styles, or parameters erected by professional groups; and standards, or levels of writing achievement set by social groups. Each is elaborate. Interplay, constraints, and contradictions among them are rife.

Criteria provide the stock replies every teacher can call up at will, often cannot help but call up. Criteria comprise nothing less than a vast armory of lore that ranges from knowing the correct spelling of "existence," to feeling a break in flow for lack of a "this," to judging that a summary paragraph too much repeats the abstract and will offend the reader in the field. The size of the store can be judged by the thickness of usage handbooks, scores of which are printed, reprinted, and revised every year. Even taxonomies of criteria are so elaborate they look biological. Mellon (1978) identified 34 "compositional competencies," each of which can be judged lacking in a piece of student writing. An international cross-cultural study of school writing located 28 main criteria used by teachers (Purves & Takala, 1982); Grabe and Kaplan (1996) reduced second-language competencies to 20 main categories encompassing 47 distinct traits. Broad (2003) found writing teachers evaluating first-year writing portfolios with 46 textual criteria, 22 contextual criteria, and 21 others. Although, as I have said, faculty across the campus tend to agree on overarching readability criteria (unity, focus, clarity, coherence, etc.), there can be substantial differences in the way the terms are defined and applied and in the way other criteria are prioritized. Science teachers are more willing than humanities teachers to excuse stylistic infelicities if the content is accurate -- a finding of long standing (e.g., Halpern, Spreitzer, & Givens, 1978; McCarthy, 1987). Arts faculty like the term "creative," humanities faculty "eloquent," science faculty "analytical" (Zerger, 1997), predilections that can underlie rater disagreement in cross-campus writing-assessment programs. The same problems occur within academic units. Professors of business writing agreed that "wordiness" was an important criterion in judging business letters yet gave differing grades to the same letter, in part because they did not agree on a definition of the term (Dulek & Shelby, 1981).

Regardless of discipline-specific preferences, good writing traits hang over the head of teachers like a mort main. Criteria are long lived ("paragraph unity" has spanned four centuries now) and wield the force of etiquette. The Harbrace College Handbook, into its 57th year and 13th edition, has an appendix of 73 abbreviations for teachers to use in responding to student writing. The need to keep the abbreviations the same, in fact, kept the editors from updating the criteria (Hawhee, 1999). Whether teachers rely on Harbrace or some disciplinary style manual, most find themselves using correction symbols and abbreviations—¶ for "You need to start a new paragraph here," "SF" for "You have committed a sentence fragment," "ref?" for "These facts are not common knowledge and you need to cite your source for them." The custom is evidence of the multiplicity of criteria, which cover the field so thoroughly that any passage of student writing will scare up a flock of them. The custom also betrays the plight of responders, who hope that the makeshift of a limited correction lexicon, often ill understood by students, will control this flock that persists in coming back, season after season.

Genre and mode rules complicate matters because they mediate or undercut the assumed universality of criteria. This is a fact no amount of pleading for global writing traits will dispel. Murray (1982a) may argue that six qualities bridge all discourse modes, including fiction and nonfiction: meaning, authority, voice, development, design [organization], and clarity. But this is a position easy to counter, for instance, the universality of "voice" with the voicelessness or impersonalization of technical documentation, or the universality of "meaning" with the deliberate non-sense of surrealist poetry. Besides, all of Murray's qualities are mediated quite differently by different genres and modes. In a personal journal "authority" may be furthered by emotive language, in a travelogue by proper names, in a professional article by intertextual reference, in a public notice by organizational affiliation. College teachers do not have trouble recognizing these differences. But their students do (McCarthy, 1987), in part because there are deeply rooted differences in the kinds of genres assigned in different departments, even with genres that are supposedly cross disciplinary and WAC legitimized. Hobson & Lerner (1999), for instance, found write-to-learn principles cited as a purpose for course assignments by 91% of their arts and science faculty but by 44% of their pharmacy practice faculty. Also teachers often do not have a ready synopsis of the rules for the particular genre they are asking students to write. Further trouble lies in teachers applying response that is presumably consistent since it is regulatory, when their writing assignments include different genres with different discourse rules.

Disciplinary style adds to the trouble. All disciplines have their own Harbraces, both literally in the form of published style manuals and intuitively in the heads of their practitioners, and the rules governing rhetorical acceptability differ from one field to the next as much as the Council of Biology Editors citation style differs from that of the Chicago or the American Psychological Association. From usage as minute as the way the colon is used in titles (the absence of a colon in my own title follows a slightly antiquated convention in the humanities) to usage as pervasive as the way evidence is gathered, respected, and presented, disciplines regulate every aspect of discourse in a bewildering variety of ways. For instance, how should a teacher in a general-education course respond to the abundance of unqualified generalities common in first-year college writing? Political-science discourse seems to recommend such certainty (Ober, Zhao, David, & Alexander, 1999), philosophical argumentation encourages more formal qualification (Jason, 1988), science reports expect more hedging in the presentation of data (Luukka & Markkanen, 1997), historical papers more hedging in the claims (Meyer, 1997).

One could argue that first-year college students, most of whom have hardly imagined a major for themselves even if they have declared one, are unready for disciplinary styles, but then by what style are they to be regulated? There is no ready, or efficient, answer to this complication. Needless to say, in their first-year content courses students often find disciplinary regulation dispensed anyway, piecemeal and ragtag. Once in a study of the effect of first-year English composition courses upon essay-examination performance in other courses, my co-researchers and I were startled to see our ratings of the students' introductions in an environmental science course drop sharply from the first to the second examination. Between the two tests English-department writing teachers had trained the students to compose introductory paragraphs in essay-exam questions, while at the same time, the environmental-science teacher told students as he handed back the first test that when he saw an introduction in an examination essay he assumed the student didn't know the answer and was bluffing. He wanted no introduction at all. Here a reasonable response shortcut, advising students about the rules before they do the writing, was not as simple for the students to absorb as the teacher may have thought.

Standards, as this incident shows, mediate regulation and response with their own conflicting rules. Were the English teachers now obligated to teach and judge introductions in a way contrary to their tastes and beliefs? Couldn't the environmental-science teacher have allowed a sentence or two by way of introduction? How was I supposed to respond one time when my advanced-composition students, and I'm not making this up, told me that their journalism teacher had said no sentence could have more than one comma? Standards have a way either of over-regulating or under-regulating teacher response to writing. They over-regulate when they force teachers to focus their response on a limited number of writing traits, excluding traits the teachers know are important and highlighting traits they know are inconsequential or even vitiating. This bind is not trivial. Daily thousands of teachers, in and beyond the schools, are penning or voicing response to student writing under the pressure of state and institutional mandated tests, an effect justly given the bodily term "washback." Teachers who ten years ago were disapproving of the five-paragraph theme, an essay organization even high-school juniors recognize as juvenile, today are praising students for accomplishing it.

On the other hand, standards under-regulate response because they tend to be overgeneralized (the environmental-science teacher's standard for introductions is not typical). Take the Writing Program Administrators' "Outcome Statement for First-Year Composition," a list of 34 writing competencies students should have mastered before they are sophomores (Writing Program Administrators, 2000). (Some of the WPA competencies, it goes without saying, were not mentioned by Mellon, 1978 in his own list of 34 some twenty-two years earlier.) Among other skills, the WPA-certified student will be able to "adopt an appropriate voice, tone, or formality." But rhetorically voice, tone, and formality can be achieved in a hundred different ways, including frequency and kind of hedging. "Appropriate" just brings back the confounding of genre, mode, and discipline, and adds the further confounding element of level of accomplishment, confounding because unspecified. At what point does a student's performance become inappropriate? It depends on a raft of factors, including discipline and teacher. Beason (1993) found the instructor of a writing-enriched course in business law devoting 32% of paper commentary to the criterion of focus, yet in three other writing-enriched courses, in journalism, dental hygiene, and psychology, the instructors reacted to focus as inappropriate in their students' papers less than 10% of the time.

Nascent in writing standards is the concept of "acceptable level" or "irritation score," also called "error gravity" by ESL researchers. It leads to some of the most complex of writing response interactions. For instance, native English-speaking instructors thought errors in the English writing of Greek students more serious when the mistakes impeded comprehension, while native Greek-speaking instructors thought the errors were more serious when they were "basic" or mistakes that writers should know how to avoid (Hughes & Lascaratou, 1982). Plenty of other factors than native language mediate error gravity, of course, including sex of teacher (Hairston, 1981), perceived responsibility for the student (Janopoulos, 1992), academic field (Kantz & Yates, 1994), and rank or years teaching (Kantz & Yates, 1994)—as well as personal idiosyncrasy. An undergraduate literature teacher of mine entered only one kind of mark on my papers, Gargantuan commas that cut down through five lines of my text. Now that's grave. Workable shortcuts for teachers responding to error gravity and other issues of standards are not going to be found in the WPA Outcomes, or anywhere else that I know of.

In short, regulation leaves too much open, gives the teacher options too numerous with boundaries too flexible. This is where the paradox of response and complexity becomes clear. The more complex writing response is seen to be—and regulation is only one of five main factors—the less chance, it would seem, of finding simplified methods that will let teachers respond to students in productive and efficient ways.

Consumption, the Functionality of Response

Inevitably the terms "productive" and "efficient" route us toward a new node in the circuit of culture (Figure 1). There is nothing in the nature of regulation ensuring that the rules and conventions will be followed or even understood by students. Consumption, at least in terms of discourse practices, operates within the realm of functional discourse processing, working through focus on language medium and on reader comprehension, assimilation, recall, and application. In terms of teacher response, consumption asks some hard questions. Is the communication channel between teacher and student viable? Are there better channels? Does instructional response actually lead to its purposeful end, improvement in the student's writing?

To answer, researchers have watched teachers sit in conference with their marginal comments on the paper between them and the students, have watched them read second drafts of first drafts that they have previously responded to, have queried the students over the meaning they draw from teacher comments, have charted the student's writing improvement, or lack of it, paper after paper, week after week, in relation to the marginal commentary. Their findings are often discouraging. To begin with, although students, especially second-language students, may pay more attention to comments on drafts than on final papers (Ferris, 1995), overall they don't consume teacher response very well. Research into student perceptions has demonstrated this again and again. Students are avid for commentary (though they may first look at the grade), but when forced to explain their teachers' comments, they misinterpret a shocking portion of it. When forced to revise, they assiduously follow the teacher's surface emendations and disregard the deeper suggestions regarding content and argumentation. They prefer global, non-directive, and positive comments but make changes mainly to surface, directive, and negative ones. In sum, they want lots and certain kinds of response, but have trouble doing much with what they ask for (Hayes & Daiker, 1984; Ziv, 1984; Straub, 1997; Winter, Neal, & Waner, 1996; Underwood & Tregidgo, 2006). Of course, some of the blame rests on teachers, who often think they are positively emphasizing central qualities such as reasoning, genre form, and reader awareness while in fact the bulk of their commentary dwells negatively on surface mistakes and infelicities of syntax and word choice (Kline, Jr., 1976; Harris, 1977; Connors & Lunsford, 1988).

To make matters worse, the problems lie not so much with students or with teachers but with the interaction between them. Some of the most disturbing investigation into response seems to show that students and teachers operate under very different evaluative sets. Whether it is due to age, gender, experience, expertise, social position (Evans, 1997), or classroom dynamics (O'Neill & Fife, 1999), students and teachers tend to consume writing quite differently. They have trouble speaking the same language of response because their responses to the writing itself are so far apart. Students tend to read to comprehend, teachers to judge (Gere, 1977). Students interpret detail realistically, teachers emblematically and literarily (Hull & Rose, 1990). Students prefer an orally produced style, teachers a professionally written style (Means, Sonnenschein & Baker, 1980). Students think of writing as a maze or an obligation over which they have no control, teachers as an activity creatively in the hands of the writer (Tobin, 1989). Students look on writing options as right or wrong or else an endless shelf of choices with no way to choose, teachers as rhetorical choices leading to the best strategy (Anson, 1989). Students take a first interpretation as the only one, teachers as a first position to be revised upon consideration of other interpretations (Earthman, 1992). Students place the most importance on vocabulary, teachers on substance (Yorio, 1989). In his study of student and English teacher reading predilections, Newkirk (1984) observed that students preferred the obvious and familiar, teachers the unusual and new; students overt meanings, teachers unstated; students explicit organization with headings, teachers implicit organization; students a bland tone, teachers an emotionally enhanced tone; students a high register, teachers a middle register.

If students and teachers conflict so much in preference for style and attitude toward the author's task, then we may have a partial reason for another discouraging set of research findings. Little consistent association between writing improvement and volume or kind of response has been documented. It seems that the time-honored effort of inscribing commentary on student papers is sometimes honored with a proven pay-off and sometimes not, at least within the time span of the course. In a characteristic pre-post study, Marzano & Arthur (1977) compared the effect of three kinds of commentary, one that only marks error, one that only suggests revision, and one that only fosters critical thinking. They found minimal effect on the students' writing with all three types (for somewhat dated reviews of such studies, see Knoblauch & Brannon, 1981; Fearing, 1980). The type of response that holds the best record seems to be praise, as opposed to commentary that finds only things to correct (e.g., Denman, 1975), but even that record is inconsistent, and students rightfully are leery of praise when it is general and gives little direction. All this is no reason to return to the practice, condemned by most teachers, of restricting response to a grade, or worse of hoping that sheer volume of writing without any teacher feedback will improve student skills and not merely ingrain bad habits (the negative research findings on that hope are reviewed by Sherwin, 1969, pp. 156-167).

Obviously the circuit of response connects consumption with a new node, production, asking if problems with student use of commentary couldn't be solved with teachers spending more time producing it. Some modes of response must be more effective than other modes. But the past erose research record on effectiveness does help explain a history of teacher restlessness in response practices, usually reflecting a search for methods that will work better with the student and save the teacher time. There have been historical upswings, for instance, toward audiotaped response, one-on-one conferencing, hypertext commenting, and peer evaluation. The last has been and remains especially popular, in part because it helps reduce the paper load for teachers (see below), but also because it seems a way to translate critique into a language style and preference set that students can understand, e.g., from their own peers (Spear, 1988). But even with peer-response methods, disillusionment has been setting in, though perhaps less with teachers than with students who are sick of so much of it.

Students, after all, are the main consumers of instructional response to writing. Not unreasonably they want their money's worth. Most students yearn for teacher commentary, even when they don't make the best use of it. In an old but well controlled semester study (Stiff, 1967), experimental students found their papers with marginal comments only, or with end comments only, or with both marginal and end comments, and although the writing of the three groups was not significantly different at the end of the semester, the first two groups complained that they had been neglected. But can teacher shortcutting avoid student short-shrifting? All across campus students write papers, and they all expect a reaction beyond a grade or a number. The question is how can teachers best produce it.

Production, the Bane of Response

The conventional wisdom is that formative response to student writing—not just corrected and graded but with recommendations for improvement—is produced by teachers only with intense labor. Teachers feel this to the bone, and although deans seem to ask for new proof of this labor nearly every biennium, teachers can show that it has long been documented. As early as 1913, in a summary of reports from 700 teachers, the Modern Language Association calculated that the average college teacher spent 5.2 minutes per page, if the writing was "carefully criticized" (6). In 1955, based on the reports of 600 teachers, Dusel calculated 7.7 minutes a page. In 1965 Freyman calculated that "lay readers"—ancillary help for overworked high-school teachers—could do a full reading at 8.1 minutes a page. The increase in response time may be a shift toward more words per page (hand-written giving way to type-written), or of writing commentary from marking only for surface mistakes toward commenting on more substantive points, which takes much longer. Student papers also have grown longer. Before the 1960s, the model was the "500-word theme," or less than two typed pages. Today first-year college essays (not counting the full-blown "research paper") probably average over four pages. Connors and Lunsford (1988) cite their own and others' research indicating an average paper length of 162 words in 1917, 231 words in 1930, and 422 words in 1986—and Andrea Lunsford (personal communication) says that paper length now averages more than a thousand words! Currently the National Council of Teachers of English assumes a minimum response time of 20 minutes per paper (2002). We are talking about an huge expenditure of teacher effort. At a conservative 4 pages per essay, 7 minutes per page, and 25 students, the English or rhetoric department composition teacher is spending between eleven to twelve hours—pure labor, no breaks—bent over an initial response to just one set of papers. 
That leaves out of the total the time devoted to second and third drafts.
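
The arithmetic behind that estimate can be checked with a minimal sketch. The function name `response_hours` and its parameter names are illustrative assumptions, not anything drawn from the studies cited; the inputs are simply the conservative figures given above.

```python
def response_hours(pages_per_essay, minutes_per_page, students):
    """Hours needed for one initial response to one set of papers."""
    total_minutes = pages_per_essay * minutes_per_page * students
    return total_minutes / 60


# Figures from the estimate above: 4 pages, 7 minutes a page, 25 students.
hours = response_hours(pages_per_essay=4, minutes_per_page=7, students=25)
print(f"{hours:.1f} hours")  # prints "11.7 hours"
```

Raising any one input scales the total proportionally, which is why longer papers and larger sections weigh so heavily on the responder.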

Long hours marking papers is the mark of the composition teacher—the profession's mark of Cain, some would say. It is no wonder otherwise gentle colleagues in English departments will fight tooth and nail to keep out of the writing classroom. Nor is it any surprise to find peppered throughout composition bibliographies titles such as "When It's One Down and 1,249 Themes To Go," "Avoiding Martyrdom in Teaching Writing," "Minimal Marking," "Plus, I'm Tired," "Staying Alive," and (my favorite) "Practical Proposal to Take the Drudgery Out of the Teaching of Freshman Composition and to Restore to the Teacher His Pristine Pleasure in Teaching." Of the many volumes in the National Council of Teachers of English series Classroom Practices in Teaching English, by far the oldest still in print is called "How to Handle the Paper Load" (Stanford, 1979) -- and as I write NCTE has announced a companion volume to it in the works. Both volumes will be of interest, of course, to many teachers outside of English departments, who assign writing in classes with enrollments in the hundreds.

No wonder that new ways to handle the paper load, advances in efficiency in the production of response, have a long history in the teaching of college composition. The use of lay readers (called "reading assistants" at Vassar before they were phased out in 1908) may be one of the earliest, but it was only a harbinger. Here is a short list of shortcuts, with date of earliest record I can find in the post-WWII literature.

- Mark only the presence of a problem, leaving it up to the student to locate and correct it (1940)

- Use a projector to respond to student writing in class (1942)

- Use a checklist, or rubberstamped scale of criteria (1950)

- Hold one-on-one conferences to respond (1946)

- Have fellow students read and respond to papers (1950)

- Hold one-on-two or one-on-three conferences to respond (1956)

- Record comments on audiotape (1958)

- Respond only to praiseworthy accomplishments (1964)

- Have students evaluate their own essays (1964)

- Respond only to a limited number of criteria (1965)

- Have students use computerized grammar, spelling, or style checkers (1981)

- Add comments to the student's digital text with word-processing footnotes or hypertext frames (1983)

The last two items elevate us into electronic space, with promise of a quantum leap in efficiency. The idea that computer programs might be devised to do the work of humans in evaluating student writing was proposed in the 1950s (e.g., Wonnberger, 1955) and first effected in the late 1960s—an enterprise that ran about a decade behind machine translation and text-corpus analysis for linguistic and literary purposes. In 1968, Page & Paulus described a program with 30 machine-scorable, statistically regressed measures that achieved .63 correlation on ratings of student essays by humans, as good as the human raters themselves could intercorrelate. With advances in computational algorithms, measuring semantic and topical structures as well as lexical and grammatical ones, businesses dealing in computerized essay evaluation currently announce much higher correlations. Currently the College Board's WritePlacer, the Educational Testing Service's e-rater, and ACT's e-Write have already been used to admit undergraduates and place them into writing courses. Feedback programs such as ETS's Criterion and Vantage's MY Access! can analyze classroom writing in lieu of the teacher. Competing programs are cropping up like Deucalion's soldiers. The profit motive is irresistible, of course, and the sales are going to increase. So it is important to understand why, in the mind and response practice of most English teachers today, the role of e-valuation of student writing is largely inconsequential.

Historically, it did not begin that way. Although Page and Paulus were financed by the College Entrance Examination Board, their success was represented to the writing-teacher world as a way to reduce the paper load, "a proper program for correction of essays," helping reduce the "tremendous amounts of time out of class" teachers have to spend doing "an ideal job in essay analysis," "equalizing the load of the English teacher with his colleagues in other subjects" (p. 3). Their report even ends with a program, devised by Dieter Paulus, showing how the machine can "make comments about a student's essay" and do so "in a real school situation" (p. 150). In another early promotion of computer grading, Slotnick and Knapp (1971) draw a proto-computer-lab scenario for teachers "burdened with those ubiquitous sets of themes waiting to be graded" (p. 75). In the laboratory of the future, students would type their first drafts on a special typewriter and a clerk would feed the typescript through a "character reader" (i.e., scanner); that night the machine grader would encode the tape and print out responses; next day the student would have commentary to use to revise for a second draft. Until then, the teacher would not have seen the paper. It was a fantasy world in 1971, when suitable scanners were still some years away. But the future didn't happen, at least not quite yet. Today, with all the equipment in place and universities often willing to purchase the software, composition teachers rarely use it in this way, though teachers in other departments sometimes do not show the same reluctance.

The reason has to do with production. In the activity of reading and diagnosing student writing for instructional purposes, to progress toward machine scoring is to regress back to the single-grade mark on a paper. Despite multiple-regression or semantic-map analysis of student texts, the computer program ends up with a single-digit response: the paper is a 3, or a 5. (This is one reason why the computer can be programmed to correlate so well with testing-firm human raters, who are also reducing an essay to a single-digit score as fast as they can; see Haswell, 2006.) Machines can evaluate a text but they cannot diagnose an author, cannot help lead the writer to better writing. Of course machines can report the outcomes of their subroutines: average word-length of sentences, or percent of words that are among the 1,500 most often appearing in print. But the relation of such variables with writing growth is notoriously complex, nor do such variables count for much in the typical writing teacher's repertoire of criteria relevant to student writing improvement. Of the 34 outcomes listed by the WPA (2000) for first-year writing, none, as yet, can be machine scored. And of course even though home-computer software will now do grammar and spelling checks, neither does so with anywhere near the accuracy of the average writing teacher (Haswell, 2005). But in most comp teachers' criterial top ten, grammar and spelling fall at or near one. As for the computer's programmed Pollyanna diagnostic advice, which hasn't changed much since Paulus's first effort, even students laugh:

YOU USED 5 PARAGRAPHS WHY SO MANY Q TRY TO REORGANIZE THE ESSAY SO THAT YOU DON'T USE SO MANY PARAGRAPHS USUALLY A PARAGRAPH SHOULD CONSIST OF SEVERAL SENTENCES . . . . I ENJOYED READING YOUR ESSAY (Page & Paulus, 1968, p. 159)

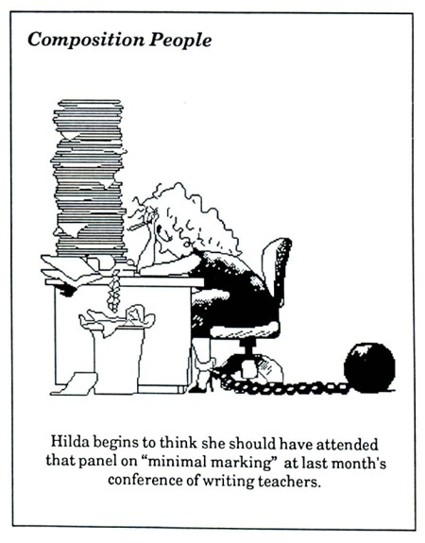

If machines, though, could produce even part of a writing teacher's true response, truly instructional, many writing teachers would be tempted to buy into them (Brent & Townsend, 2006). It would be a complex love-hate relationship, and most intense with teachers conducting straight composition courses. Response to writing lies at the essence of what they do, takes time to do well, yet takes up so much of their time. On a weekly and sometimes daily basis, it takes away energy they want to devote to the rest of their instruction: preparing instructive assignments, orchestrating class activities, deepening connections between writing and cultural and social ends. Content-course faculty who assign extended writing feel the same conflict, though their critical reading habits and sites may differ (Walvoord & McCarthy, 1991). Their way with students' words is understudied, but what we have shows production to be a major if sometimes tacit factor. Swilky (1991) worked with a philosophy teacher in a WAC faculty seminar who talked about his teaching only after the seminar was over, finally confessing to her that he had not incorporated writing-to-learn methods in his classes because he thought they would increase his workload. Issues of response production may be worse for English composition faculty because their response activities are often represented publicly in a contradictory way that they find demeaning but half true: lowly comp teachers chained at home for the weekend stooped over stacks of student essays (Figure 2). Meanwhile in the background they can hear the promotional literature from the software companies offering the greatest writing-teacher shortcut of all time, computerized "diagnostic" feedback on student essays "in minutes," "immediately," "in an instant" (Rothermel, 2006).

Figure 2: From Composition Chronicle: Newsletter for Writing Teachers 8(3), April 1995, p. 11.

Representation and the Sites and Acts of Response

Responding to student papers at home over the weekend! How depressing. Indeed, where and how response takes place makes a difference. Superficially, it may make a difference in terms of productivity. Some teachers need their special armchair, their familiar mug of tea, and their favorite pen before they can start commenting on a stack of papers, pre-discourse rituals little different from the ones they need before they compose papers of their own. Other teachers, such as the one I once saw marking papers in a grocery-store checkout line, seem free of location constraints. More profoundly, though, and certainly more complexly, teachers are never free of site and site is never disconnected from the way teachers are represented socially and culturally.

I'm arguing that the role of the responding teacher and the setting of the act of response combine to represent the teacher-responder in a way that may have complicated and powerful effects on students, as well as on others. Three decades ago Stewart (1975) made this point in a provocative essay contending that teachers should present themselves as aesthetically distanced, as people of "sensibility" who can remove themselves from the accidental qualities of a student's work and attend to the rhetorical essences of it, and to do this it helps to read papers in the right setting. (One ideal setting, Stewart writes bitterly, would be a home "in which you could work undisturbed by the pressing needs of families which suffer because you are underpaid," p. 241). Stewart's image of the teacher as a person of "sensibility" has a quaint ring to it, but the need for undisturbed space is contemporary enough. He gives us just the first of several role/site representations with which teachers who work with student writing have often identified themselves. With each, I invent a characteristic scenario, though not an unrealistic one.

- Distanced aesthetician or rhetorician (Stewart, 1975). The teacher comments on the work of a student in another teacher's class, on a signed form to be returned to the student, as part of a cooperative teaching project.

- Involved co-creator (Lauer, 1989). The teacher and a student work together to write a piece, sometimes over coffee, sometimes through e-mail exchanges.

- Demanding coach (Holaday, 1997). A writing-center tutor advises the student on a second draft for the student's teacher; or the teacher helps prepare students for a department exit portfolio reading by discussing departmental standards and evaluation habits of the other teacher-evaluators.

- Persuasive motivator (Johnston, 1982). In a workshop session the teacher provides a set of questions designed to prompt students into further ideas on a draft, handing the heuristic out to small groups of students. Each group is collaborating on a piece. The teacher then visits groups, helping solve problems.

- Experienced modeler (Young, 1997). In class the teacher projects a passage from a student essay on screen and re-writes it; or in an end-comment to a paper requests that the writer read a particular essay for ideas before starting the second draft.

- Prompting dialoguer (Lauer, 1989). In the teacher's office the teacher meets with a group of two or three students, who have no more than pre-writing notes, and the teacher encourages questioning and inquiry.

- Judicious lawgiver (Murray, 1982b). When assigning a new essay, the teacher makes available a student-written essay that fulfills the assignment at the C level, and explains why it would earn that grade.

- Supportive parent (Turbill, 1994). The teacher privately praises the student's lab report after class in the hallway.

- Expert reader (White, 1984). In class the teacher shows how a reading assignment, a professionally written piece, achieves rhetorical effects germane to the students' next writing assignment.

- Sharp-eyed editor (Dohrer, 1991). During a workshopping session in a computer classroom, the teacher vets, one last time, each student's letter to the editor, just before it is e-mailed off.

- Experienced diagnostician (Krest, 1988). Students know that their paper will be read and commented on by other students in the class, during a "read-around." The students record their comments on one margin, the teacher on the other margin, and then the teacher and student conference, one on one, over the comments.

- Real reader (Maimon, 1979). To the class the teacher reads unrehearsed through a student's first draft, thinking aloud, or more exactly responding aloud, all along the way.

Note that the last four roles portray the teacher basically as a reader. On the one hand, the act of reading is so private that it hardly seems a public role at all. On the other hand, there is no discursive practice that more distinguishes the activity of teaching writing within a campus faculty. Yet reading, the necessary first step in response to writing, is no less complex than the commentary that follows. Functionally, we read in many different ways, and each way has its public face. Think again about written response on a student's paper. The commentary is the teacher's representation, and different kinds of remarks will represent the teacher in different roles. The teacher can read setting the student's language against usage, striking out solecisms and replacing them with conventional expressions, in which case the teacher will appear as grammarian or guardian of the language. Or the teacher can read with a metadiscursive eye to effects the writing will have on other readers, circle whole passages and write "Delete: everyone knows this!" or "Especially persuasive" in the margin, in which case the teacher will appear as experienced author or editor-in-chief. Or the teacher can read with a memory of the student's previous writing, start an end-note with the comment, "Finally, a breakthrough," in which case the teacher will appear in the role of caring physician or observant coach. And if teachers leave no mark except for a grade (as in Stiff's 1967 experiment), students may image them as distanced and uncaring dispensers of the law.

This dramatis personae of teacherly roles may sound as though teachers, when they act as responders to student writing, are just so many authors in search of a character. But one represents one's self willy-nilly, and a teacher can and does switch to other roles in the blink of an eye, perhaps to that of content expert setting some subject matter forth to students as the scholar-of-the-lecture-hall or the director-of-the-research-lab. The point is that writing-responder roles alone are subtle, unstated, multiplex, and numerous, and they can't but mediate the teacher's commentary to the student. In interviews with students, O'Neill & Fife (1999) found that they constantly interpreted teacher comments through their construction of teacher personalities and roles: "‘She's more like friend than a teacher'" (41). Gordon Harvey (2003), in a piece published after I had compiled the above list of responder roles, offers his own list, including "introducer" (to academic ways), "midwife-therapist," "representative of an institution," "one's actual self," and other roles not identical to ones on my list. His point is that choosing and maintaining these roles adds to the stressful job of responding to student writing, a job finally that is "impossible" given the numbers of students and papers, a job that leads to teacher discouragement and burnout. That is not my conclusion, but it does challenge the hope that out of this problematic multiplicity of role, task, and site representations effective and sustainable methods of response can be extracted.

Identity and Resistance, the Major Roadblock to Response

This is not to say, of course, that both writing students and writing teachers can't resist these representations. They often do. Resistance, in fact, may be a constant factor in instructional response to writing. And it is more than the obvious fact that psychologically students often resist teachers' advice, or teachers often resist commenting on papers (who hasn't procrastinated on that job?). Resistance is part of the human response to representation itself. How else can a person escape the suffocating anonymity of public representations except by resisting them? How else respond to representation except by developing a counter-representation that images oneself, even if only in one's head, as somehow different, somehow not representative? That activating image of oneself (or one's self), identity, brings our circuit of cultural response practices back around to regulation, for identity can also be defined as the image we use to regulate our own practices in resistance to the cultural practices foisted upon us.

However all this may be, resistance inheres in response to writing (Paine, 1999; Chase, 1988). Students are reluctant to change their rhetorically inept ways because their old ways have stood them academically in sufficient stead (second-language specialists call this "error resistance"), and because they don't like the image of themselves as inepts. Room for disbelief is especially large in straight composition classes, which is itself an argument for WID. It's easier for the student to shrug off the comment "These last two arguments are far too simplistic to be convincing" as just the English teacher's opinion than the history teacher's comment "You've listed only two of the five main political outcomes of the Great Depression." Students resist the marginal question because it's not an answer (Smith, 1989), the corrective commentary because it's depressing (Reed & Burton, 1986), the pithy teacher-efficient mark because it lacks explanation (Land & Evans, 1987), the lengthy end-comment because it seems too critical and generalized (Hillocks, 1982), the recommendation for improvement too removed from their current habits because it seems too risky (Onore, 1989), the critique from peers because it comes from novices (Odell, 1989), the critique from teachers because it comes from an instructor they sense holds a marginal or tenuous academic position (Whichard et al., 1992). They resist not only for such questionable reasons but also for a good one, because they are more and more seeing themselves as independent and ultimately free to use language as they wish. They resist because they are students. As William Perry put it in a very early piece on resistance and response (two decades before publishing the book for which he is famous, Forms of Intellectual and Ethical Development), "the young will resist even your efforts to reduce their resistance" (1953, p. 459).

That doesn't make response any easier for teachers. The self-contradictions in the above panoply of student resistance just encourage teachers to identify themselves, among other things, as skeptics. To student papers teachers have to bring their own resistance. Perhaps the least defensible reason for it is the assumption that student writing is abnormal, apprenticeship work, and therefore worthy of skepticism, at least a kind of skepticism teachers don't bring to real-world writing (Lawson & Ryan, 1989). The most defensible reason for their resistance is the thought that the effort they are about to spend as a teacher-responder may well not be worth the effect it will have on the writer. It is a sad reason that either begs for response shortcuts or pleads to bag the whole operation.

Some Directions to Shortcuts

We are back, full circuit, to the response shortcuts I mentioned at the beginning: checksheets, correction symbols, mnemonic acronyms, weighted criteria scales, peer-critique handouts, computer feedback software, and many more. Do they really produce more effect with less effort? Can they be revised to do so? As I said, my intention with this paper is not to offer new and better ones, an enterprise which would require a paper at least as long as the current one, but to suggest some directions for review, revamp, or creation. The activity nodes in Du Gay's "circuit of culture" will help map these suggestions.

Regulation. Research clearly documents that for students, writing criteria, genre and mode rules, disciplinary styles, and standards are heavily mediated through the particular academic course. It is a mediation that changes the critical terms and adds immeasurably to their multiplicity, in practically every classroom they enter. Teacher feedback can largely be a waste of time, for both teacher and student, unless the critical language is grounded in the specific rhetoric of the field under study. The caution applies to every kind of shortcut: assignment desiderata ("Be sure your paper is well organized"), marginal comments ("Be less wordy here"), and analytic scales ("Content: 15 out of 20 points"). Explaining discipline-specific terminology sounds like more work for teachers, but a handout early in the course will save much time later. For genre traits, teachers can provide a model paper, with important aspects marked and explained. Selection is another route to pursue, although it goes against the cover-all philosophy of many academic responders. Teachers can choose a few of the most important and most accessible characteristics of disciplinary style and prepare a sheet explaining and illustrating them. Let the rest go. The same principles of showing and selecting apply to paper commentary. If a paper is wordy, the teacher can rewrite one sentence, showing how it is done, and ask the student to redo the entire paper. The redo option also makes sense in dealing with standards. Students can be told that papers below a certain level of writing will just be handed back without marks, to be resubmitted within one week. Setting exact critical levels ("More than five misspellings and the paper will receive a redo") seems a denial of the contextuality of error gravity, although fair warning of errors that score high on the teacher's irritation scale will save everybody time.
For years my practice has been to stop reading and marking as soon as I sense a paper that is below a reasonable level, and to draw a line across the page and write "Redo" at that point. Papers usually come back substantially improved with no more effort on my part.

Consumption clearly is heavily interdependent on regulation and production. The communication gap between teacher response and student understanding of it may be the most difficult of all these interactions to shortcut. But avenues toward efficiency are there. Attention to students and how they are receiving methods of teacher response requires extra time at first but may save time in the long run. Illustrative is a method developed by Hayes, Simpson, & Stahl (1994) by which students on their own can prepare for essay exams in content courses. An acronym helps make the system accessible for students, PORPE: Predict potential essay questions, Organize key ideas to answers using your own terms and structures, Rehearse key ideas, Practice by writing trial essays, Evaluate the essays in terms of "completeness, accuracy, and appropriateness." The system certainly saves the teacher time since all the work is done by the student and performance on the actual examination essays may need less teacher response. It is a good example of a universal labor-cutting technique more teachers could avail themselves of, in which shrewd preparation of students for a writing assignment saves the teacher time in responding to it. But if the teacher does not keep an eye on the process, it can well turn out to be counterproductive. With PORPE, how good are the students' self-chosen terms and structures? How well can they judge their own writing, especially with critical language as discipline-tailored as "accuracy" and "appropriateness"? As regards the communication channel between teacher and student, in the long run the best shortcut is to make sure critical terms and response methods are interpretable, whether they comprise test preparation, assignment direction, composing heuristic, self- and peer-evaluation, to-do checklist, paper commentary, or grade breakdown. Even the most obvious term may be misinterpreted.
Leon Coburn tells a story of the time he kept writing "cliché" in the margin while the student kept producing more and more clichés. Finally Coburn asked what was going on, and the puzzled student responded, "‘I thought you kept marking them because you liked them'" (Haswell & Lu, 2000, 40). Amusing, but the story assumes a parable-like shape when we consider that students often prefer clichés and frequently don't even recognize them as such (Olson, 1982).

Production. If regulation lies at the center of the purpose of academic writing response, production lies at the center of its method. Consequently production prompts and guides most teacher shortcuts. To the formal ones listed above (praise only, peer-evaluation, self-evaluation, audiotaping of comments, etc.), every teacher has added their own: assign fewer papers, respond only to the worst papers and OK the others, comment on only one aspect of the paper (as the assignment forewarns), assign only short papers that demand concise writing, talk with the student over a batch or portfolio of papers in an office conference, comment only if the student asks for comments, read papers only if a student brings one in complaining about the commentary or the grade given by a teaching assistant. The list is endless. Some are once-only, spur of the moment, and ethically questionable, like the time a graduate friend of mine, a teaching assistant in political science, told his class he had gone hunting and forgotten that he had put their papers in the trunk of his car and he had to throw them away because they got covered with quail blood; or the time an English teacher (who understandably prefers to remain anonymous) got desperate for time, gave a set of papers unread back to her students, and told them they were the worst essays she had ever read and that they were to re-write them, replacing the next assigned paper (Haswell & Lu, 2000, 51). The teacher said that the re-written papers turned out to be the best she received all semester, but if other teachers now seize what appears to be an amazing shortcut (much student improvement, zero teacher time), again I recommend they appraise the results carefully. A major hazard of production shortcuts is that they are constructed almost solely for the teacher and usually judged solely by the teacher. As with any workplace production, the situation begs for quality control.
The ultimate issue is how well the methods work, gain of student learning set against gain of teacher time. As in the marketplace, popularity of a product is no guarantee of its worth, and research into writing response questions some of the most widespread of production shortcuts. Peer-evaluation, for instance, can generate superficial revisions (Dochy, Segers, & Sluijsmans, 1999), and automated grammar-checkers and non-automated English teachers identify the same mistakes only 10% of the time (Brock, 1995). On the other hand, labor-intensive response methods do not necessarily equate with learning gains—witness the hours teachers have devoted to time-consuming correction of every mistake in student papers with, it seems, only minor influence on subsequent student writing.

Representation. Does the image itself of a teacher swotting over student writings help improve student writing? For students does that image serve as a model, a goad, a caution, a laughing stock? As activity theory posits, based upon the way teachers present themselves students will construe them and act upon that construction. The advice then is to know that, among the many roles of responder to student writing (distanced aesthetician, involved co-creator, demanding coach, etc.) there is a choice and some roles are more efficient and efficacious than others. With a doable class size of twenty-five students, occasionally I have had students bring their newly written paper to me for a ten-minute office conference. I simply start reading the paper, voicing my reactions as I go. The student can see me read, rather than operate from some fanciful image of me as a reader. I find things I like, wonder aloud where the piece is going, hesitate over unclear sentences, and discover what qualities of writing the student should be working on next. Usually I don't have time to finish the paper, but students rarely mind, and I imagine I get more useful critique accomplished in ten minutes than in the twenty minutes it normally takes me to comment in writing on a five-page student essay. That, I think, also leaves the student with an image of me as a careful and engaged reader, which in turn encourages them to write better papers for me later. At least students have praised the method in their course evaluations.

Representations can go wrong, of course. Take my commenting technique of minimal marking (Haswell, 1983, though not entirely mine, since earlier versions go back at least another quarter century, e.g., Halvorson, 1940). There are two steps to it. The first has the teacher put only an X in the margin next to a line with any surface mistake in it. Students have to discover the mistake and correct it on their own, which places the task of learning where it belongs, on the student. The second step is for students to have their emendations vetted by the teacher. Although they catch around two-thirds of their original errors, often (39% of the time in my first-year writing classes) they correct mistakes incorrectly, correct non-mistakes, or simply can't find the error. Unfortunately the second step subtracts some of the time teachers gain with the first step, and teachers I have known have omitted it—a major mistake, I think, not only because important student learning takes place in the second step but also because neglecting it represents the teacher as lazy, not caring, perhaps a little cruel.

Identity / resistance. Or unprofessional. Once a colleague about twenty years senior came into my office, beefy red in the face, thrusting a student paper at me. It turned out to be one a student of mine had written and I had responded to. Among my marginal comments were the X's of my minimal marking system. The student had not yet tried to find and correct the errors, nor I, obviously, to vet her corrections, but that made no difference to my colleague. He told me I had abjured my duty as an English teacher to correct student writing and that I had better correct my ways. Identity is the representation one constructs of oneself, in part out of the representations others make of oneself. Professionally, it is the self one has to live with, on and in the job. In this case I chose to resist my colleague's notion of myself, and carried on with a response technique I found cheap, effective, and satisfying. When it comes to one's identity as a responder to student writing, the research offers little direction, except the self-evident one to avoid self-destructive identities: Miss Grundy, never satisfied, or Bartleby the Scrivener, burnt out to the point of inaction. I suppose the main caution is how easily teachers can forget that the end is students' learning and that if productive response to their writing, despite all the shortcuts we can contrive, still is laborious, well, that is what we are paid to do.

There is one hard-earned identity, however, that some teachers build for themselves and that I can especially recommend. It is based on an understanding of the very complexity of response I have been describing, and on the pragmatic knowledge of response practices that are both sustainable and functional, sustainable because they are efficient for the teacher and functional because they are lucid and focused enough for students to put into use. This responder identity might be described as craft wise. Like experienced fixers in all trades, craft wise responders have learned to home in on the main problem and attend to it with as little effort as possible.

Their wisdom boils down to eschewing the traditional cover-all-bases approach to writing response and adopting a smaller task-specific, problem-specific, and learner-specific method. The techniques and advice have been around for a long time. Know your student and limit your response, especially your advice for further work, to no more than one or two things your student can do. It is response easy in the making but not easy to make. It requires sifting through the options and contradictions of regulation, navigating the constraints of consumption, weighing the cost of production, critiquing the masks of representation, and working with and not against student and teacher identities. It is no surprise that the advice often emerges from faculty who have intensively studied student response to teacher response: Diogenes, Roen, and Moneyhun (1986) and their work with English instructors; MacAllister (1982), Mallonee and Breihan (1985), and Lunsford (1997) and their work with campus faculty; Zak (1990) and her conversations with students over her own comments; above all Harris (1979) and her years of experience conferencing with writing-center students of other teachers. Long of birth, the advice emerges from a teacher-identity again born of resistance—but in this case a resistance to the stubbornly rooted professional position of full correction. Yet it is advice that will meet another resistance, the student's, whose own development so often prefers the safe and known and is therefore reluctant to pull the one switch that might further that development. Clear in its directions, easy to consume for the student, easy to produce for the teacher, this particular response practice seems to have everything going for it. But it doesn't come with guarantees.

The Ever-Frustrating, Perpetually Fascinating Issue of Writing Response

With instructional response to writing, it seems, skepticism rarely fails to play a part. The poet William Stafford once told me (it was, in fact, on that lonely, twisty highway between Lewiston, Idaho and Pullman, Washington) that his job as a teacher responding to student writing often consisted of no more than "leaving tracks"—a trail of marginal dots and checks and squiggles—which at least showed the writers that he had cared enough to read and comprehend. Maybe he was right, and the final worth of writing-teacher commentary is only a kind of passing the torch, keeping the students assured that they will always have words people will read. But skepticism itself can't last. Don't we all remember a teacher's comment on our writing—voiced or inscribed—that pulled some sort of switch, that rankled forever or precipitated a critical moment changing our writing forever? So teachers carry on. As regards truly instructional response, it seems teachers will find themselves nowhere else but on the road of excess, always making the circuit from certainty to complexity to skepticism and round again.

Wherever they are on that road, however, there is always a via media. Complexity may tempt the teacher to self-serving simplicity, but it is worth remembering that in an important sense every act of evaluation of student writing involves simplification. Grades (plus and minus) sort into no more than twelve categories; "Good, clear examples" expresses the comprehension of only one reader; "Your ending is flat" omits mention of scores of other writing accomplishments and traits. With the peculiar and situation-specific act of evaluation we call "response," simplification, of course, can serve for good or bad. I've never forgotten my sense of indignation when at the end of a sophomore history course I picked up four assigned book reviews I had written and discovered only a √ at the top of each first page. Nor have I forgotten the look on my student's face (he was in the first class of sophomores I ever taught) and the loss-of-face sound in his voice when he picked up his paper, saw my fulsome comments, and said, "You wrote more than I did." With response to student writing, it's uplifting to know that less can sometimes be better and that simplification is unavoidable. It is also enlightening to know that shortcuts can turn into culs-de-sac. In the final analysis, the best route for teachers is to keep checking the response to their response and, of what is workable, to go with what they know works.

A Bibliographic Post Scriptum

What researchers know works in instructional response is considerable, much more than I've been able to cover. On evaluation of writing within disciplines other than composition, there are three fine collections of essays, Yancey & Huot (1997), Sorcinelli & Elbow (1997), and Strenski (1986). Anson, Schwiebert & Williamson (1993) is an extensive annotated bibliography of writing across the curriculum, where practical writing-response techniques can be found devised by teachers in accounting, biology, business, computer science, economics, engineering, history, literature, mathematics, nursing, philosophy, psychology, and elsewhere. Speck (1998), an invaluable bibliography of writing evaluation, has over 1,000 entries, solidly annotated. Recently Underwood and Tregidgo (2006) review 21 research articles, 14 of them at the college level, looking much as I have for what is effective. The research database is much more extensive than they suggest, however. The online index of post-secondary scholarship into writing, CompPile (Haswell & Blalock), good for 1939-1999, currently locates around 420 data-gathering studies involving teacher response.

References

Anson, Chris M. (1989). Response styles and ways of knowing. In Anson, Chris M. (Ed.), Writing and response: Theory, practice, and research (pp. 332-366). Urbana, IL: National Council of Teachers of English.

Anson, Chris M. (Ed.). (1989). Writing and response: Theory, practice, and research. Urbana, IL: National Council of Teachers of English.

Anson, Chris M., Schwiebert, John E., & Williamson, Michael M. (1993). Writing across the curriculum: An annotated bibliography. Westport, CT: Greenwood Press.

Bazerman, Charles. (1989). Reading student texts: Proteus grabbing Proteus. In Lawson, Bruce, Ryan, Susan S., & Winterowd, W. Ross (Eds.), Encountering student texts: Interpretive issues in reading student writing (pp. 139-146). Urbana, IL: National Council of Teachers of English.

Beason, Larry. (1993). Feedback and revision in writing across the curriculum classes. Research in the Teaching of English, 27(4), 395-422.

Brent, Edward, & Townsend, Martha. (2006). Automated essay-grading in the sociology classroom: Finding common ground. In Ericsson, Patricia Freitag, & Haswell, Richard H. (Eds.), Machine scoring of student essays: Truth and consequences (pp. 117-198). Logan, UT: Utah State University Press.

Broad, Bob. (2003). What we really value: Beyond rubrics in teaching and assessing writing. Logan, UT: Utah State University Press.

Brock, Mark N. (1995). Computerized text analysis: Roots and research. Computer Assisted Language Learning, 8(2-3), 227-258.

Chase, Geoffrey. (1988). Accommodation, resistance and the politics of student writing. College Composition and Communication, 39(1), 13-22.

Connors, Robert J., & Lunsford, Andrea A. (1988). Frequency of formal errors in current college writing, or Ma and Pa Kettle do research. College Composition and Communication, 39(4), 395-409.

Cross, Geoffrey, & Wills, Katherine. (2001). Using Bloom to bridge the WAC/WID divide. ERIC Document Reproduction Service, ED 464 337.

Denman, Mary E. (1975). I got this here hang-up: Non-cognitive processes for facilitating writing. College Composition and Communication, 26(3), 305-309.

Diogenes, Marvin, Roen, Duane H., & Moneyhun, Clyde. (1986). Transactional evaluation: The right question at the right time. Journal of Teaching Writing, 5(1), 59-70.

Dochy, Filip, Segers, Mien, & Sluijsmans, Dominique. (1999). The use of self-, peer and co-assessment in higher education: A review. Studies in Higher Education, 24(3), 331-350.

Dohrer, Gary. (1991). Do teachers' comments on students' papers help? College Teaching, 39(2), 48-54.

Du Gay, Paul (Ed.). (1997). Doing cultural studies: The story of the Sony Walkman. London: Sage; The Open University.

Dulek, Ron, & Shelby, Annette. (1981). Varying evaluative criteria: A factor in differential grading. Journal of Business Communication, 18(2), 41-50.

Dusel, William J. (1955). Determining an efficient teaching load in English. Illinois English Bulletin (October), 1-19.

Earthman, Elise A. (1992). Creating the virtual work: Readers' processes in understanding literary texts. Research in the Teaching of English, 26(4), 351-384.

Eblen, Charlene. (1983). Writing across-the-curriculum: A survey of a university faculty's views and classroom practices. Research in the Teaching of English, 17(4), 343-348.

Elbow, Peter. (1993). Ranking, evaluating, and liking: Sorting out three forms of judging. College English, 55(2), 187-206.

Evans, Kathryn. (2003). Accounting for conflicting mental models of communication in student-teacher interaction: An activity theory analysis. In Charles Bazerman & David R. Russell (Eds.), Writing selves / writing societies: Research from activity perspectives. Fort Collins, CO: WAC Clearinghouse, https://wac.colostate.edu/books/selves_societies/.

Evans, Kathryn A. (1997). Writing, response, and contexts of production or, why it just wouldn't work to write about those bratty kids. Language and Learning Across the Disciplines, 2(1), 5-20.

Fearing, Bertie E. (1980). When it's one down and 1,249 themes to go, it's time to establish a realistic teaching load for English teachers. ADE Bulletin (no. 63), 26-32.

Ferris, Dana R. (1995). Student reactions to teacher response in multiple-draft composition classrooms. TESOL Quarterly, 29(1), 33-53.

Freyman, Leonard. (1965). Appendix: Marking of 250-word themes. In Arno Jewett & Charles E. Bish (Eds.), Improving English composition: NEA-Dean Langmuir Project on Improving English Composition (p. 99). Washington, D. C.: National Education Association.

Gere, Anne Ruggles. (1977). Writing and writing. English Journal, 66(8), 60-64.

Gottschalk, Katherine K. (2003). The ecology of response to student essays. ADE Bulletin (no. 134-135), 49-56.

Grabe, William, & Kaplan, Robert B. (1996). Theory and practice of writing: An applied linguistic perspective. London; New York: Longman.

Hairston, Maxine. (1981). Not all errors are created equal: Nonacademic readers in the professions respond to lapses in usage. College English, 43(8), 794-806.

Halpern, Sheldon, Spreitzer, Elmer, & Givens, Stuart. (1978). Who can evaluate writing? College Composition and Communication, 29(4), 396-397.

Halvorson, Nelius O. (1940). Two methods of indicating errors in themes. College English, 2(3), 277-279.

Harris, Muriel. (1979). The overgraded paper: Another case of more is less. In Stanford, G. (Ed.), How to handle the paper load: Classroom practices in teaching English 1979-1980 (pp. 91-94). Urbana, IL: National Council of Teachers of English.

Harris, Winifred H. (1977). Teacher response to student writing: A study of the response patterns of high school English teachers to determine the basis for teacher judgment of student writing. Research in the Teaching of English, 11(2), 175-185.

Harvey, Gordon. (2003). Repetitive strain: The injuries of responding to student writing. ADE Bulletin (no. 134-135), 43-48.

Haswell, Richard H. (2005). Automated text-checkers: A chronology and a bibliography of commentary. Computers and Composition Online. http://www.bgsu.edu/cconline/haswell/haswell.htm.

Haswell, Richard H. (2006). Automatons and automated scoring: Drudges, black boxes, and dei ex machina. In Ericsson, Patricia Freitag, & Haswell, Richard H. (Eds.), Machine scoring of student essays: Truth and consequences (pp. 57-78). Logan, UT: Utah State University Press.

Haswell, Richard H. (1983). Minimal marking. College English, 45(6), 600-604.

Haswell, Richard H., & Blalock, Glenn. (2006). CompPile: An ongoing inventory of publications in post-secondary composition, rhetoric, ESL, technical writing, and discourse studies. http://comppile.tamucc.edu/

Haswell, Richard H., & Lu, Min-Zhan. (2000). Comp tales: An introduction to college composition through its stories. New York: Longman.

Hawhee, Debra. (1999). Composition history and the Harbrace College Handbook. College Composition and Communication, 50(3), 504-523.

Hayes, Christopher G., Simpson, Michele L., & Stahl, Norman A. (1994). The effects of extended writing on students' understanding of content-area concepts. Research and Teaching in Developmental Education, 10(2), 13-34.

Hayes, Mary F., & Daiker, Donald A. (1984). Using protocol analysis in evaluating responses to student writing. Freshman English News, 13(2), 1-4, 10.

Hillocks, George, Jr. (1982). Getting the most out of time spent marking compositions. English Journal, 71(6), 80-83.